Getting customer insights with user tests


It’s hard to get something right the first time you try it. The New England Patriots had four grueling decades before they won three Super Bowls in four years. Morgan Freeman didn’t land his first major Hollywood role until he was 52.

And this is especially true with software, where the stakes are high. Launching a bad product experience can mean hordes of upset customers, lost revenue and, of course, a waste of your team’s most precious resource: time.

To mitigate these risks, we turn to user testing. Whenever you want to design a better onboarding UX, incorporate extensive user testing into the scope of the project. Testing the onboarding UX with real users helped HubSpot achieve a 400% lift in one of its KPIs. It saved the team time and made the user experience less confusing and more valuable.

Get our User Testing toolkit! Including a user testing schedule, interview script, email templates, and more.

Don’t have a UX researcher on your team? Not to worry. All you need to know is how to ask the right people the right questions, and you’ll get insights that help move the needle. Here’s everything you need to know about how to run an effective user test (and exactly how HubSpot used user testing to get a 400% lift in tracking code installs).

First, you need a design. It does not have to be a working piece of software. It can be a paper sketch, a clickable mockup, or whatever medium you want. There are just two requirements:

If you’re designing an onboarding flow, you have a goal: get the user to complete (at least) one meaningful task in your product. For Twitter, this may be following people the user admires, while for Duolingo this may mean starting their first Spanish lesson.

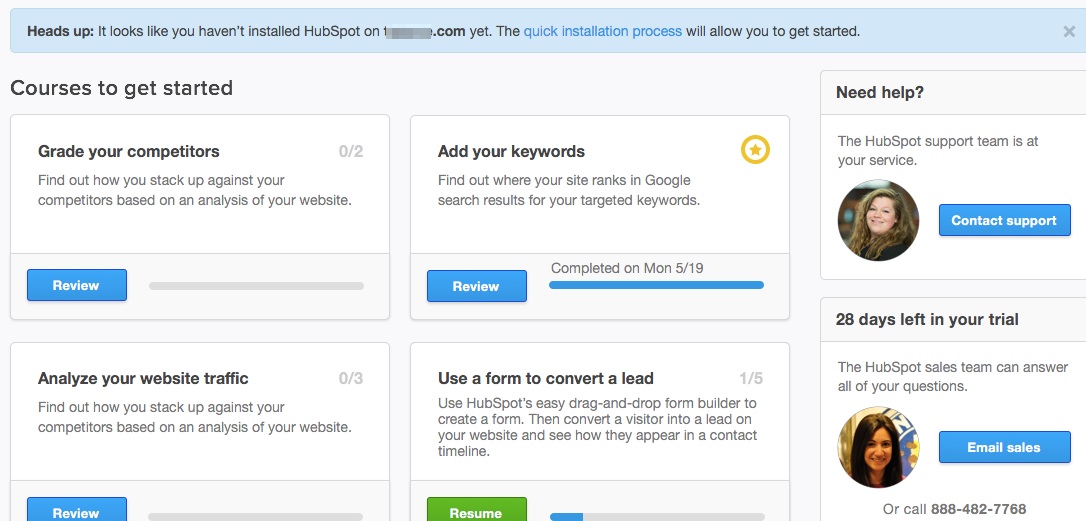

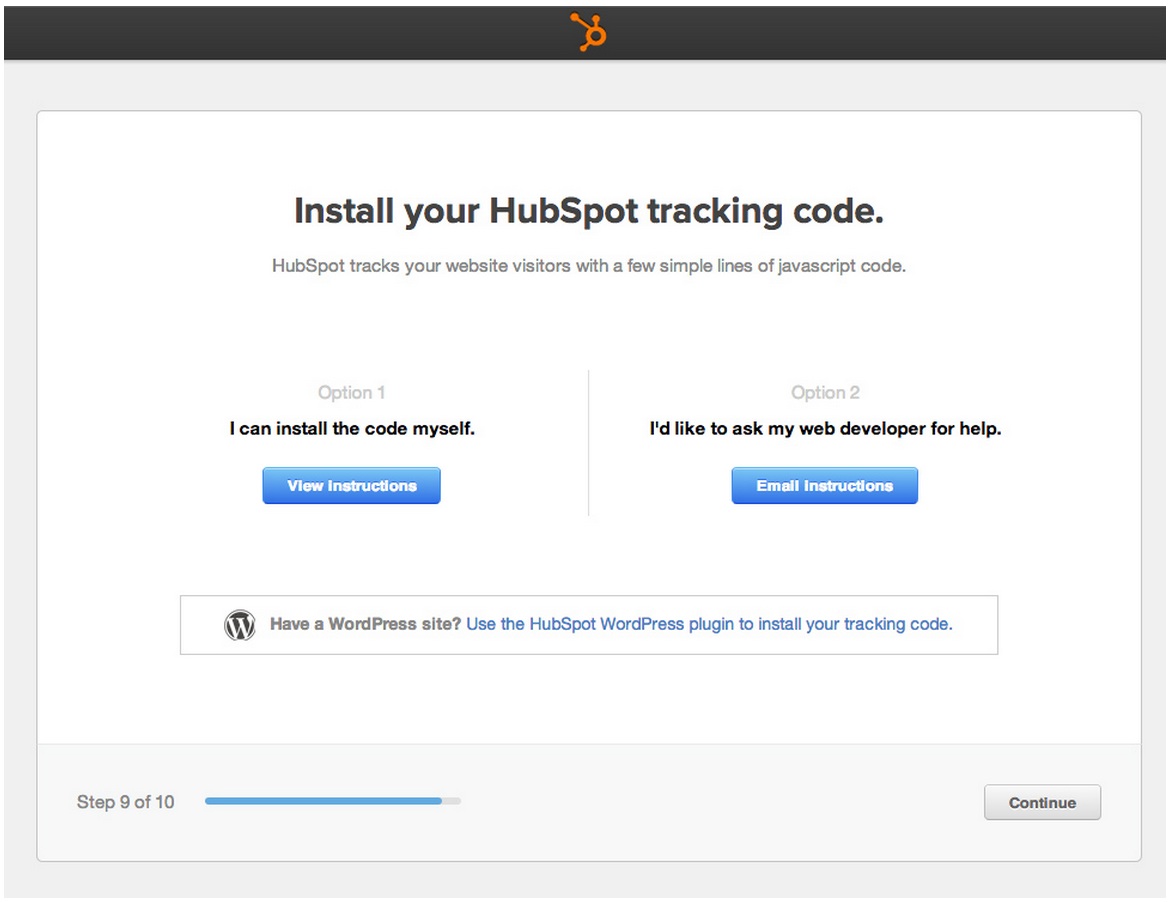

When designing an onboarding UX flow for HubSpot’s 30-day free trial, our goal was to increase the number of trial users who install the HubSpot tracking code on their site. Our hypothesis was that once they had the tracking code installed, they would receive more value and thus be more likely to convert into paying customers. Here’s what the freemium dashboard looked like at the time:

By doing research calls with trial users, we discovered that many of them had no idea what the tracking code was, where to go to install the tracking code on their own site, or what the benefit of installing it would be.

To remedy this, we designed a task in the trial onboarding flow that would teach them why this was important and make it easy to get help with installation. Our goal was to user test this task to learn what was keeping people from installing the HubSpot tracking code, and to identify what we could change to increase conversion from signup to install.

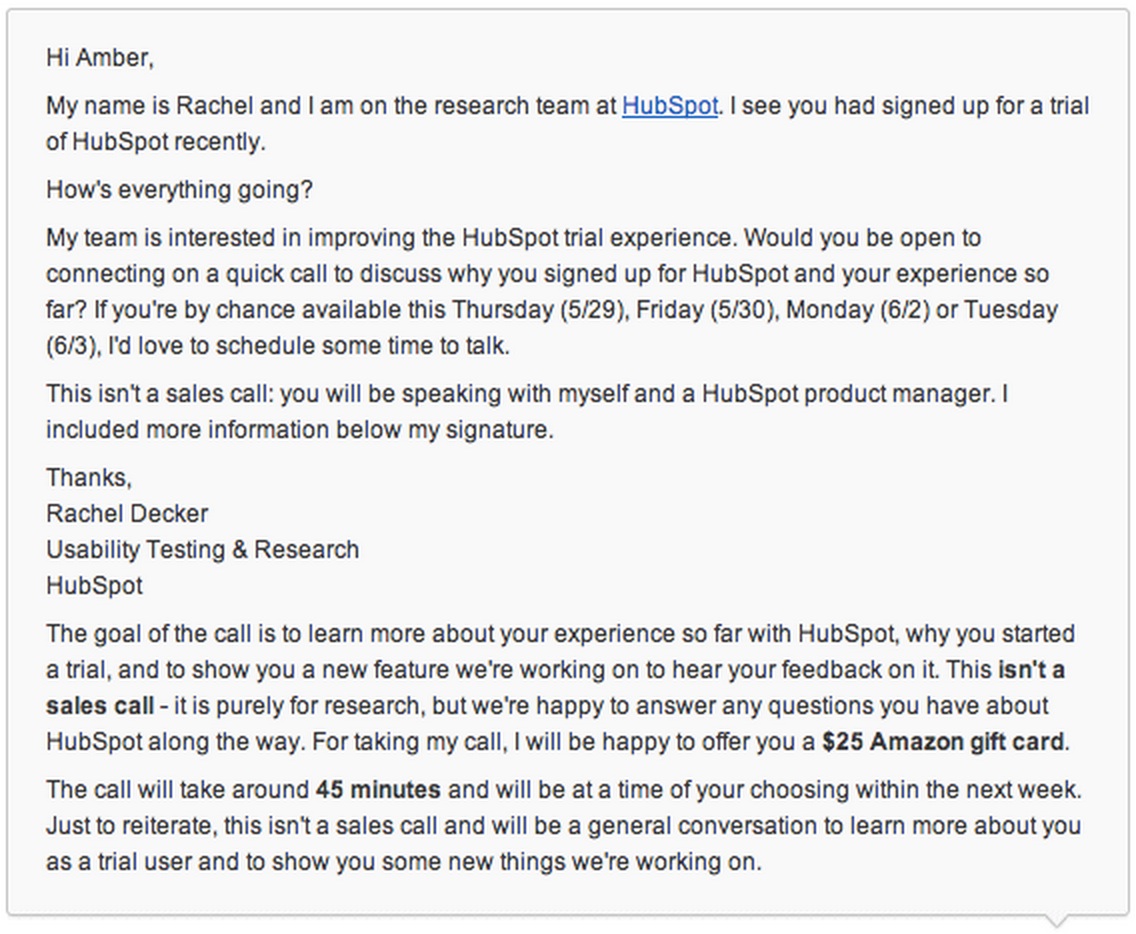

In order to get the right results from your user test, you need to recruit the right users. Google’s Michael Margolis has a great piece on the best way to select participants. For our HubSpot user onboarding designs, we wanted to get feedback from 30 people. To recruit them for the study, we sent emails like this one to unengaged trial users:

There are two important things to note about these emails:

For any substantial change to your product, like a new feature or a new user onboarding UX flow, we recommend you run no fewer than five interviews. Keep testing until you are no longer surprised by the results. Surprisingly enough, you can expect a high response rate (about 50%) to these emails, so sometimes it’s best to send only 10 at a time until you have your desired number of tests scheduled. Also, remember to follow up after a few days with those who haven’t responded. You’d be surprised how many appointments get scheduled after a second attempt.
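The batching advice above comes down to simple arithmetic. As a rough sketch in Python, the hypothetical helper `batches_needed` estimates how many batches of 10 emails it would take to reach a target number of interviews. It assumes, as a simplification, that every response turns into a scheduled interview and that the response rate stays near 50%:

```python
import math


def batches_needed(target_interviews: int,
                   response_rate: float = 0.5,
                   batch_size: int = 10) -> int:
    """Estimate how many email batches are needed to schedule the target.

    Assumption (not from the article): every response becomes an interview.
    """
    if target_interviews < 0:
        raise ValueError("target_interviews must not be negative")
    if not 0 < response_rate <= 1:
        raise ValueError("response_rate must be in the range (0, 1]")
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")

    # Emails required so that the expected number of responses hits the target.
    emails = math.ceil(target_interviews / response_rate)
    # Round up to whole batches, since emails go out one batch at a time.
    return math.ceil(emails / batch_size)


print(batches_needed(5))   # minimum recommended number of interviews
print(batches_needed(30))  # size of the HubSpot study described above
```

Under these assumptions, five interviews take about one batch and a 30-person study about six. Real response rates vary, so treat the result as a starting estimate and adjust after the first batch.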

At HubSpot, we schedule these calls virtually and use WebEx to run them. There are other software options that will work, but you should look for one that has screensharing, recording and chat capabilities (as well as the ability to switch who is sharing the screen). If you are doing in-person interviews, download Steve Krug’s in-person planning checklist.

For each user test you perform, follow the same process you use with every other participant in the study. This helps ensure you can compare your observations apples to apples and draw logical, unbiased conclusions from your findings. There should be three parts to your script:

First, ask basic questions to get to know the user, put them at ease, and discover some relevant information about their experience with the trial thus far. Here are some of the background questions we used in our HubSpot user test:

Notice that most of these aren’t yes or no questions. They are open-ended and designed to get the person to open up about their experience and expectations.

Next, you want to move into the task-based portion of the interview. Before your user takes any action, you need to set the context. In our HubSpot test, we told one user, “You’ve been doing research on the HubSpot website and decide to start a free trial. You know that you’ve been looking for a way to consolidate all of your marketing to one place. Start the trial and walk through what you are doing.”

We knew from the background questions that this user was looking for one tool to handle all of their marketing, and that’s what we used to make the tasks contextual. We could’ve framed the task for another user by saying, “Your boss said you need to sign up for HubSpot and decide whether it’s the best marketing solution. Start the trial and explain what you are looking for. How do you decide that this is the best solution?”

During the task-based portion of the interview, ensure that you:

Once you’re ready, share the task with your user and ask them to share their screen. At HubSpot, we give users clickable wireframes made with InVision. But for you, this could be a staging version of your software or even hand-drawn mockups.

With each new screen or step in the task, first let the user think out loud and make note of their initial impressions. If you think there’s more to learn, there are some questions you can ask (see the user testing toolkit above).

Once you’ve gone through all the tasks, wrap up the interview. Let them know that they’ve reached the end of the tasks and that their feedback was incredibly helpful, then thank them for their time and say goodbye. Shortly after the interview, don’t forget to follow up with a thank-you email and the promised gift.

Because each user went through the same set of tasks, you can now aggregate the results and see how many users actually completed each task. This could be something as simple as:
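For illustration only, a tally like this can live in a spreadsheet or in a few lines of Python. The sketch below uses made-up participants, task names, and outcomes (none of it is HubSpot’s actual data) and a hypothetical helper, `completion_rates`, to compute the share of users who completed each task:

```python
from collections import Counter

# Hypothetical observations: (participant, task, completed?).
# These values are invented for the example.
observations = [
    ("P1", "start trial", True),
    ("P2", "start trial", True),
    ("P3", "start trial", True),
    ("P1", "install tracking code", True),
    ("P2", "install tracking code", False),
    ("P3", "install tracking code", False),
]


def completion_rates(rows):
    """Return {task: fraction of attempts that were completed}."""
    attempts = Counter()
    completions = Counter()
    for _participant, task, completed in rows:
        attempts[task] += 1
        if completed:
            completions[task] += 1
    return {task: completions[task] / attempts[task] for task in attempts}


for task, rate in completion_rates(observations).items():
    print(f"{task}: {rate:.0%} of users completed")
```

With only a handful of participants, these percentages are a way to spot where people get stuck, not statistically meaningful measurements.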

It’s also helpful to share questions or comments that came through during testing. For example, we noted things like:

You don’t need a lengthy write-up on the findings. Just get your team to agree on what the most important takeaways were, document them, and decide on next steps together. Here is how we document our user testing results at HubSpot.

From these customer interviews, three themes emerged:

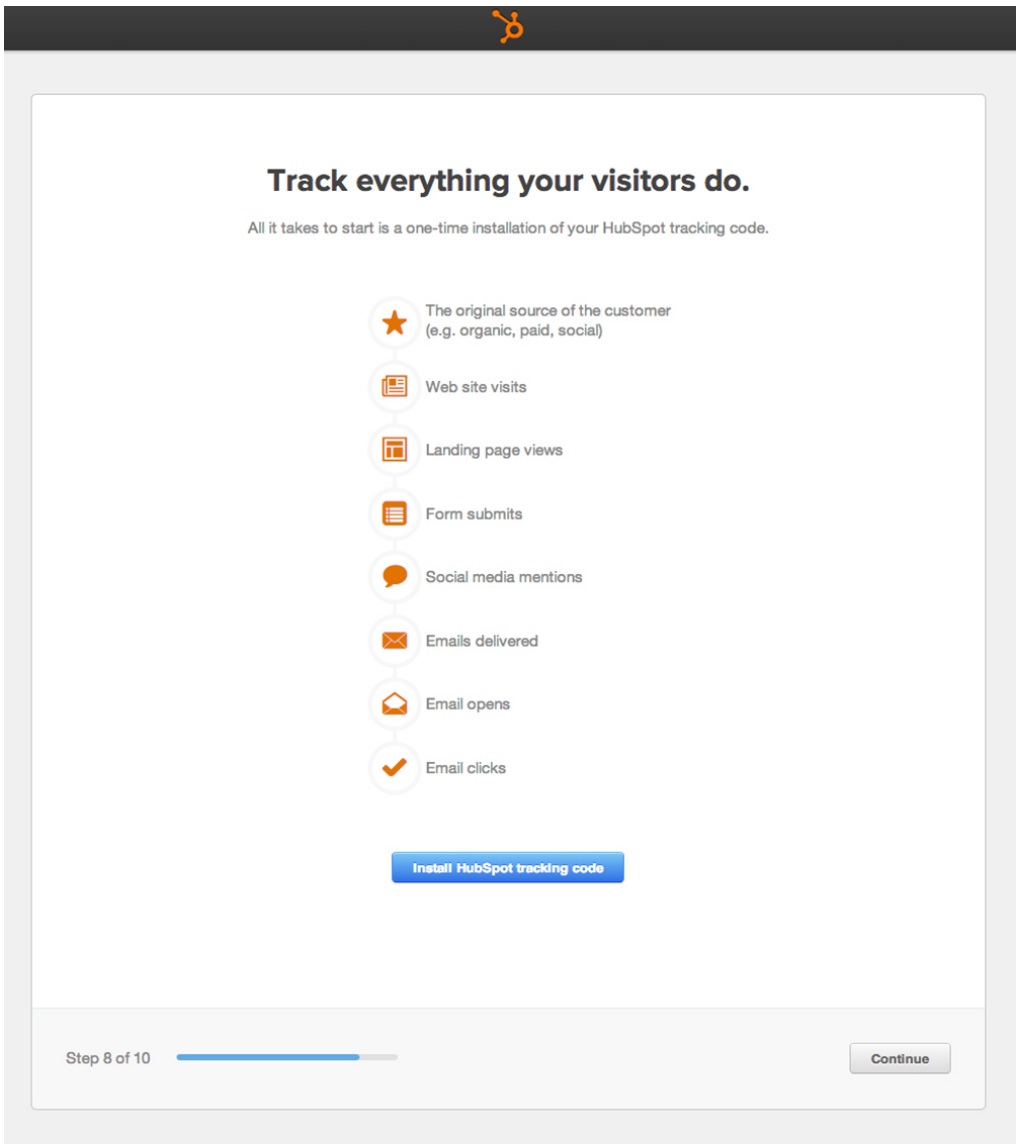

From these observations, we made the following changes to our free trial experience:

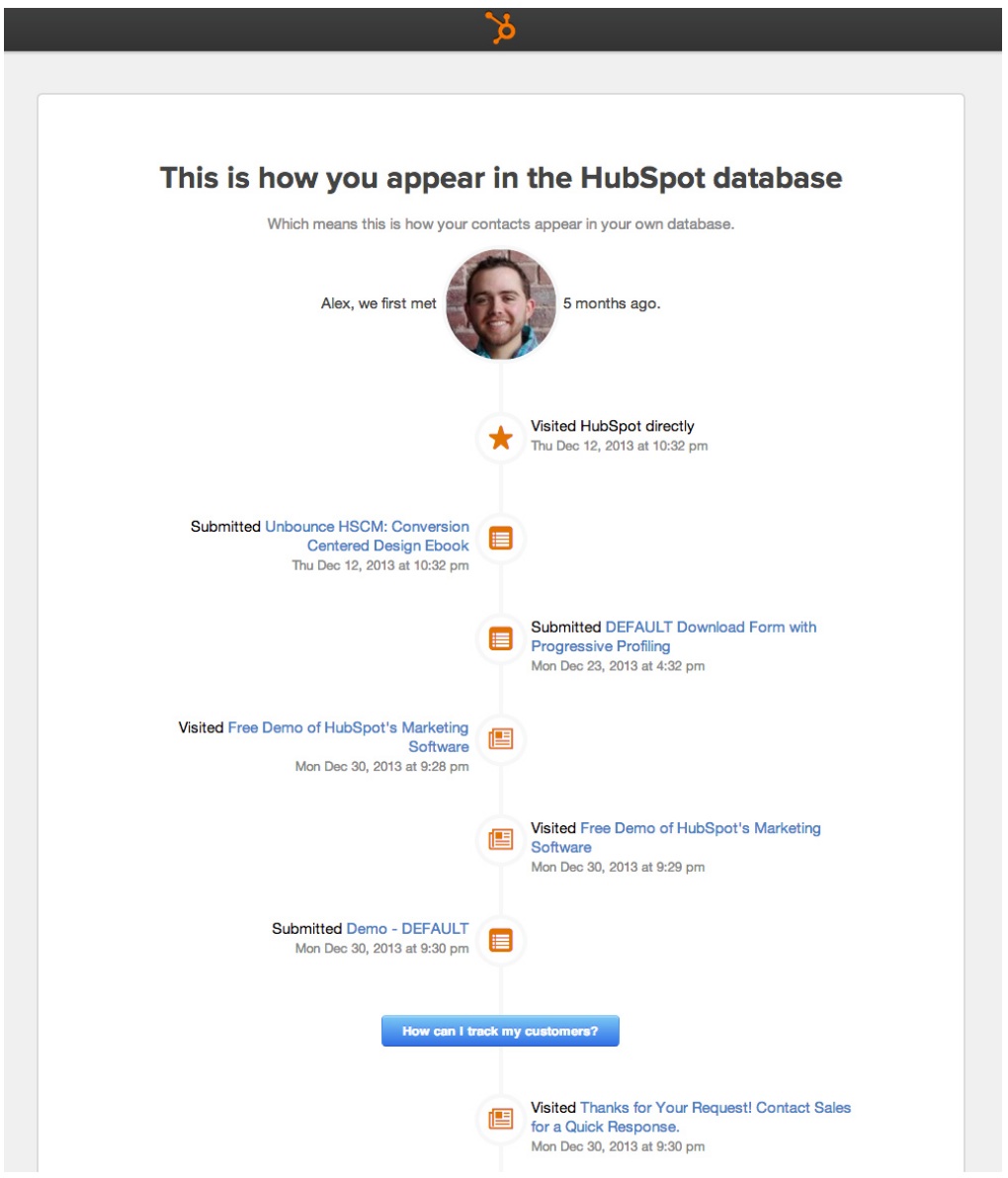

After making these changes, we shipped a variation of the screens below and saw a 400% lift in the number of tracking code installations after one week. All because we solicited feedback on a design before building it out.

Now’s the time to try your hand at user testing your onboarding UX flow. Start with designs, call trial users, and get feedback! Use that feedback to make something even better. You won’t get it right the first time, and maybe not even the 10th time, but keep trying. We’d love to see what you come up with.


This lesson was written by Rachel from the UX Sisters, a duo of UX researchers at HubSpot who share some incredibly helpful lessons on their blog.